This project was to research and implement a system to enable a mobile manipulator robot to autonomously drive to a specified location. A human operator specifies the goal waypoint by selecting a position within the robot’s map of the environment. The robot will compute the shortest path from its current position to the goal, taking into account any known static obstacles on the map. Then the robot will navigate to the goal whilst avoiding any other unknown dynamic obstacles that may appear along the path.

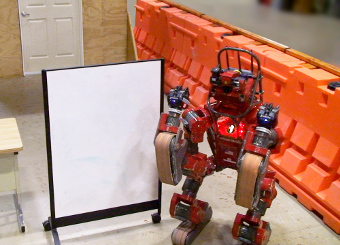

The system was tested on CHIMP (CMU Highly Intelligent Mobile Platform), a robot that was designed to perform maintenance tasks in dangerous environments [1]. During the DARPA Robotics Challenge an operator controlled the robot via joystick and keyboard. However it is tedious and slow for a human to drive the robot safely due to the inherent latency of the human-to-robot perception and control loop.

Ideally the operator would specify high-level navigation instructions to the robot,

such as:

- “Drive through doorway”

- “Explore the adjacent room”

- “Go to building A”

- “Drive over to that shut-off valve”

In these scenarios an existing map might be available, derived from architectural drawings. Alternatively an aerial drone could survey the area or CHIMP can build a new map as it explores the environment using SLAM (Simultaneous Localization and Mapping).

Robot platform:

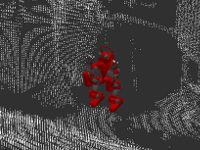

On CHIMP’s head are multiple perception sensors. A pair of spinning Hokuyo LiDAR sensors provides a 360° view of the environment. The perception system outputs a coherent point cloud, with minimal “smear” of the LiDAR points, by compensating the robot’s relative motion using an accurate localization system. The IMU, GPS and LiDAR are fused together to compute the robot’s pose in a globally consistent reference frame.

Approach:

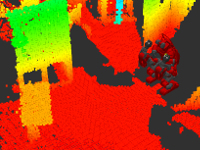

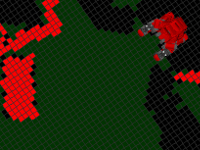

The environment is modeled in 3D using a voxel grid (Fig. 3). The filtered point cloud is used to update the local area of the voxel grid by ray-tracing from the sensor origin to each LiDAR point. The 3D voxel grid is used elsewhere for object manipulation, however a more efficient 2D representation is used for the navigation map. The voxel grid is flattened down onto the ground plane to make a 2D occupancy grid (Fig. 4), where each cell is labelled as occupied, empty or unknown.

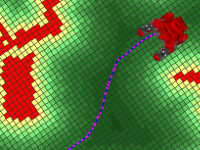

A discrete approach is used for path planning. The robot’s footprint is approximated by a circle circumscribed around the widest diameter, which is important because CHIMP’s arms hang beyond its tracks. The occupancy grid is converted to a discrete cost map (Fig. 5) using the method from [2]. However, insteading of using two path planners, the recently-developed Field-D* planner [3] was used. This planner uses interpolation along the edges of each grid cell to create smoother paths (Fig. 5) than other discrete planners like A* or D*.

For simplicity the robot’s drive system is modelled using a simple differential-drive motion model. The robot tracks the path output from Field D* using the pure pursuit method [4] to control the heading angle and forward velocity.

Results:

The robot’s maximum forward velocity was capped to ensure stability while making sharp turns. An improvement would be to implement a second local planner using dynamic motion primitives, similar to [2]. This would enable CHIMP to drive faster on straight sections or large-radius turns.

The update-rate for the voxel grid is limited by the rotation speed of the LiDAR. Waiting for one complete LiDAR scan introduces significant latency that affects CHIMP’s ability to stop when confronted by an unexpected dynamic obstacle. Iteratively updating the voxel grid using each LiDAR “strip” would improve the response time for collision detection.

This work was conducted with help from my colleagues in the Tartan Rescue team at Carnegie Mellon University

and NREC (National Robotics Engineering Center).

| References: |

|

[1] G. C. Haynes, D. Stager, A. Stentz, B. Zajac, D. Anderson, D. Bennington, J. Brindza, D. Butterworth, C. Dellin, M. George, J. Gonzalez-Mora, M. Jones, P. Kini, M. Laverne, N. Letwin, E. Perko, C. Pinkston, D. Rice, J. Scheifflee, K. Strabala, J. M. Vande Weghe, M. Waldbaum, R. Warner, E. Meyhofer, A. Kelly and H. Herman, “Developing a Robust Disaster Response Robot: CHIMP and the Robotics Challenge”, Journal of Field Robotics, Vol. 34, No. 2, March 2017.

|

|

[2] E. Marder-Eppstein, E. Berger, T. Foote, B. Gerkey and K. Konolige, “The Office Marathon: Robust Navigation in an Indoor Office Environment”, IEEE International Conference on Robotics and Automation (ICRA), June 2010.

|

|

[3] D. Ferguson and A. Stentz, “The Field D* Algorithm for Improved Path Planning and Replanning in Uniform and Non-Uniform Cost Environments”, Technical Report CMU-RI-TR-05-19, June 2015.

|

|

[4] R. C. Coulter, “Implementation of the Pure Pursuit Path Tracking Algorithm”, Technical Report CMU-RI-TR-92-01, January 1992.

|